Prompt engineering is a subcategory within LLMs (Large Language Models). There are two primary approaches to prompt injection defence: (1) crafting a strategic system prompt and (2) sanitising the user prompt. When implementing an LLM in a Business space, it is really important to understand Prompt Engineering.

%20(2).png?width=400&height=400&name=BLOG%20Prompt%20Injection%20Defence%20(2%20levels)%20(2).png)

However, since the field of LLM prompt injection is so new and ever-evolving, it is difficult to know whether one defence method alone can stop new injection methods. Consequently, it is recommended that the Swiss Cheese Model be implemented. This model implies that you can strive towards a much more secure system by implementing various imperfect defence methods (rather than focussing on a single one).

.png?width=400&height=400&name=BLOG%20Swiss%20Cheese%20(1).png)

How do you go about creating a strategic system prompt?

The different ways of crafting a well-defined system prompt include specifying the role and the task of the LLM, using instructive modal verbs, and delimiting the instructions.

1. Specifying the role and the task of the LLM

Since these models were trained on a large corpus of data (and a lot of it originating from the internet), it is essential to have a well-defined task for the LLM to execute. The system prompt should be unambiguous and stipulate the task that the model is expected to perform at the start of the system prompt. However, by only specifying a task for the model to execute, a malicious user might be able to manipulate the model to change the task as stated in the system prompt. To fight against this injection, a system role may also be assigned to the LLM.

A system role refers to the higher-level purpose the LLM has. To explain the difference between a role and a task, consider an educator who has the role of a teacher but the task of teaching a specific unit of a module within a specific academic semester. If a system role is stated within the system prompt, it provides a (thin) layer of protection, ensuring that the task to be executed must be within the specified realm of the role. If a malicious user succeeds in changing the task with a prompt injection, the system role might prevent the modified task from being executed.

To elucidate the system task and role, consider the following system prompt that has a role and a task:

You are an AI chatbot that specialises in the South African insurance industry. You are tasked with answering questions from customers about generic South African insurance claims.

Suppose a malicious user succeeds in altering the system prompt task to, for example, perform mathematical calculations with regard to another industry. In that case, the LLM’s response will deny the request as it is outside the scope (knowledge about the South African insurance industry) of the LLM’s role.

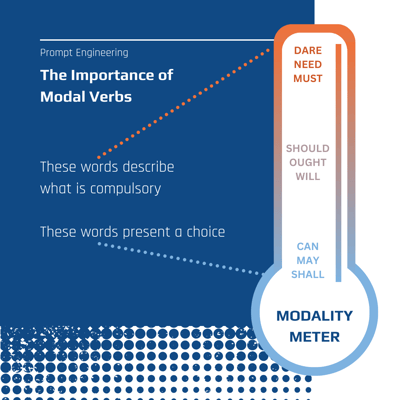

2. Using instructive modal verbs

Instructive modal verbs play a noteworthy role when developing a system prompt. Modal verbs show possibility, intent, ability, or necessity. Because they’re a type of auxiliary verb (helper verb), they are used alongside the infinitive form of the main verb of a sentence. Common examples of modal verbs include “can”, “should”, and “must”. To increase the odds that a model will perform the stated action within the system prompt and not steer off course, instructive modal verbs must be used with specific intent, as LLMs will distinguish between an action that is allowed versus an action that is a requirement. If calls-to-action are reinforced with strong modal verbs such as “must”, system prompts can be protected from performing the actions possibly provided through a malicious user prompt injection.

To illustrate this predicament, there is a significant difference between the following two statements:

(1) You can summarise the text.

(2) You must summarise the text.

A well-crafted prompt injection might indicate to the LLM that they should forget any previous instructions and perform other actions. With Statement (1), the LLM might opt out of the original system prompt since the word “can” has a low modality and indicates that the instruction is optional. In contrast, with Statement (2), the LLM might refuse to opt out of the original system prompt since the modal verb “must” has a high modality and indicates that the instruction is compulsory.

3. Delimiting the instructions.

Delimiting refers to segmenting components of a system prompt from one another. This entails clarifying the different parts of the instructions for the model. The prompt has to delineate the following:

(1) instructions

(2) additional information

(3) that which must be emphasised

Specifically, by delimiting instructions, you establish clear boundaries for what the model is expected to do. This effectively constrains its responses within those defined parameters. This approach aids the model in not executing tasks or providing information outside the predetermined system scope, providing a thin layer of prompt injection defence.

An example of employing delimiters is as follows:

You must follow these sequential instructions, delimited by three hashes: ###{sequential instructions}###.

The user’s query is delimited between three dashes: --- {user input} ---.

Moreover, you can also consider filtering out any delimiters received through a user input, specifically targeting this defence method through an injected instruction. Delimiters help safeguard your LLM application from malicious prompts and are considered a form of user prompt sanitisation.

In a nutshell…

The two major prompt injection defence strategies are to craft a strategic system prompt and to sanitise the user input. Although some system prompt strategies could also be seen as sanitising the user input, this blog post covered some things developers should consider when creating a system prompt. This includes specifying the role and task of the LLM, using instructive modal verbs and delimiting the instructions regarding what needs to happen, the additional information the model needs, and what must be emphasised.

For more information regarding the power of LLMs and how they can be used within either your internal or client-facing applications, contact Praelexis AI. We are experienced in designing, evaluating, and deploying such LLM-powered applications and would love to be part of your generative AI journey.

* Content reworked from Bit.io, OpenAI, and Nvidia

** Illustrations in the feature image generated by AI

Internal peer review: Matthew Tam

Written with: Aletta Simpson

Unlock the power of AI-driven solutions and make your business future-fit today! Contact us now to discover how cutting-edge large language models can elevate your company's performance: