With LLMs (Large Language Models) disrupting the world of machine learning, prompt engineering has become an important field.* Prompt engineering is vital in safeguarding an LLM for business use. Within the field of Prompt Engineering it is essential to understand the difference between a system prompt and a user prompt, as well as how user prompts can potentially be dangerous.

What does prompt engineering entail?

Prompt engineering is carefully crafting input queries to guide and control the responses generated by AI models — such as Large Language Models (LLMs) — ensuring they provide the desired and contextually relevant responses. These input queries are also known as prompts.

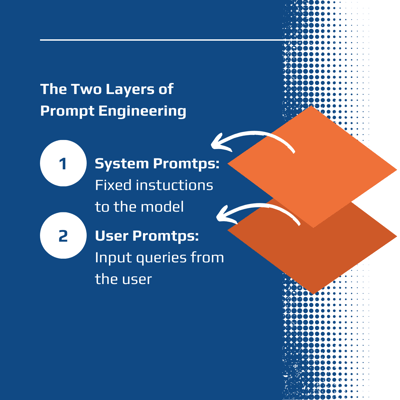

Prompt engineering for conversational LLM applications comprises two layers of prompts: (1) A fixed system prompt providing context and instructions to the model, and (2) a user prompt serving as the input query from the end user (e.g. a customer that is interacting with a chatbot). Collectively, these two types of prompts shape the LLM application’s input.

1. What are system prompts?

A system prompt is a fixed prompt providing context and instructions to the model. The system prompt is always included in the provided input to the LLM, regardless of the user prompt. The idea is that the system prompt should not be editable by any party other than the developers. Developers use these prompts to constrain the LLM’s answers in the following ways:

- The answer has to fit within a specific realm (eg. Retail).

- The answer has to form part of the fulfilment of a specific task.

- Before answering the LLM has to consider a specific context (e.g. South Africa).

- The response has to be in a sought-after style.

2. What are user prompts?

User prompts are the input queries from the user. This is how the customer interacts with an LLM application, and it enables the user to shape this application’s input. Often, this will include the question the user wants answered.

Why are some user prompts dangerous?

For most conversational LLM applications, the user prompt is expected to be a question regarding a specific subject. However, not all users have good intentions. Malicious actors may attempt to use prompt injection methods to overwrite the system prompt, effectively hijacking the LLM application. Luckily, there are ways of safeguarding your LLM.

In a nutshell…

Prompt engineering forms a part of the LLM field. System prompts and user prompts together shape the LLM’s input. A system prompt is a set of fixed instructions created by the developers to constrain the LLM’s response. The constraint is the realm, task, context and style. The user prompt is the query that your user feeds the LLM. User prompts can entail certain dangers.

For more information regarding the power of LLMs and how they can be used within either your internal or client-facing applications, contact Praelexis AI. We are experienced in designing, evaluating, and deploying such LLM-powered applications and would love to be part of your generative AI journey.

* Content reworked from Bit.io, OpenAI, and Nvidia

** Illustrations in the feature image generated by AI

Internal peer review: Matthew Tam

Written with: Aletta Simpson

Unlock the power of AI-driven solutions and make your business future-fit today! Contact us now to discover how cutting-edge large language models can elevate your company's performance: